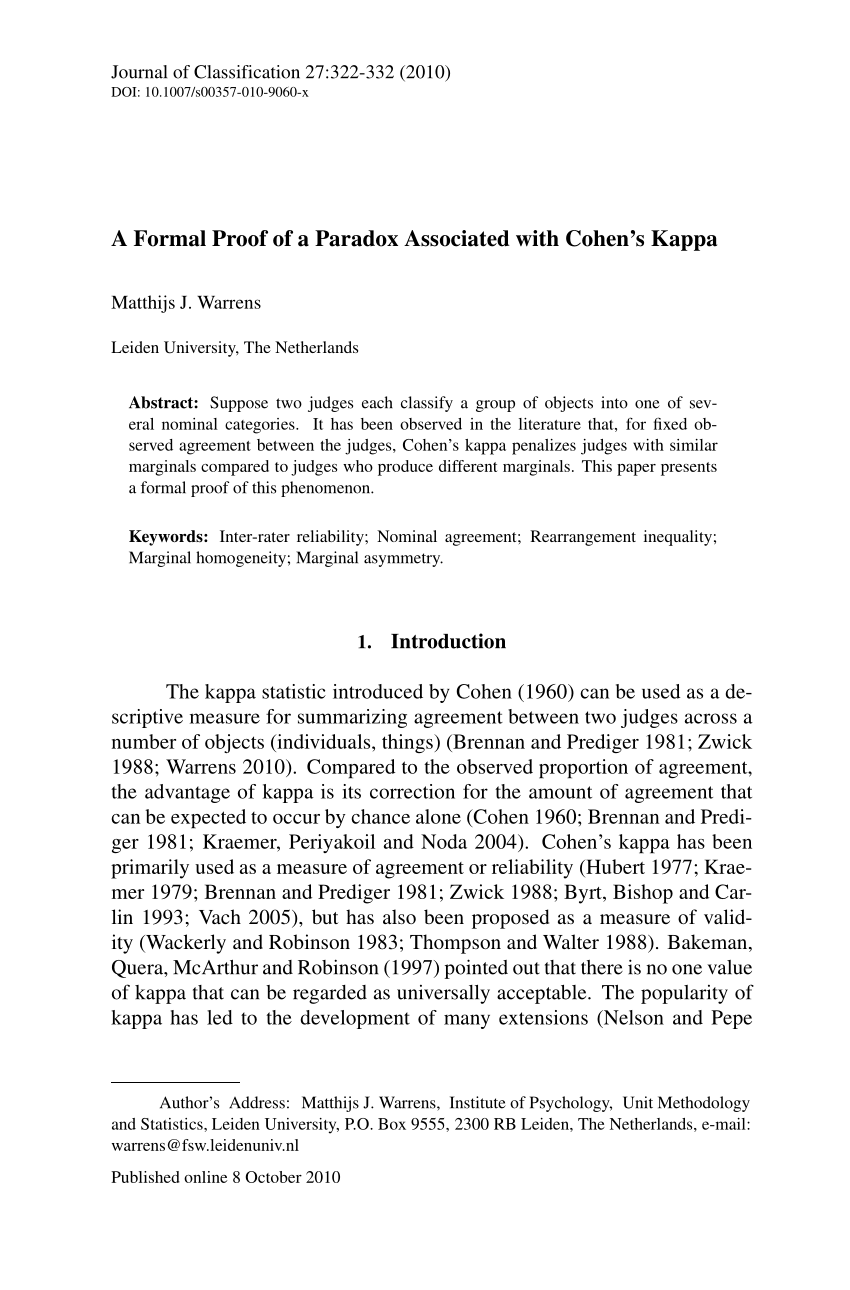

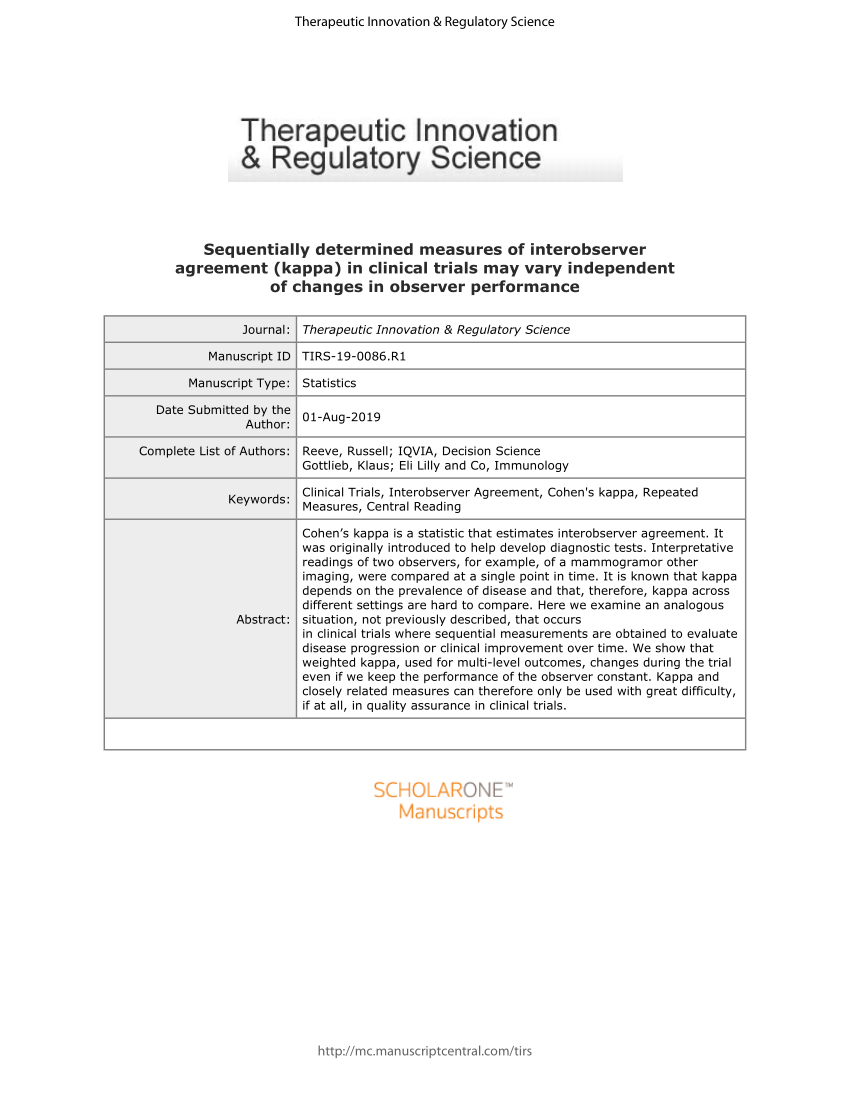

(PDF) Sequentially Determined Measures of Interobserver Agreement (Kappa) in Clinical Trials May Vary Independent of Changes in Observer Performance

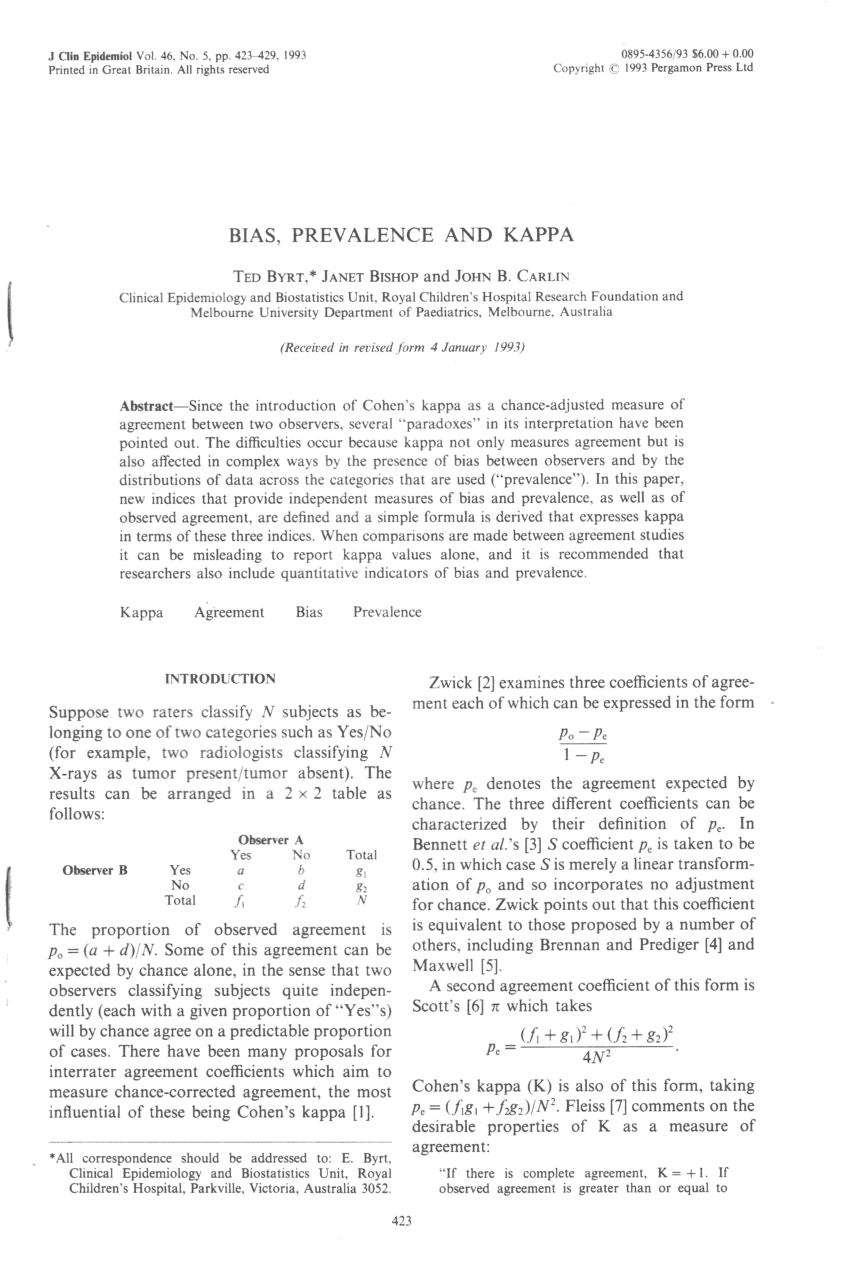

![[PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/2-Figure2-1.png)

[PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar

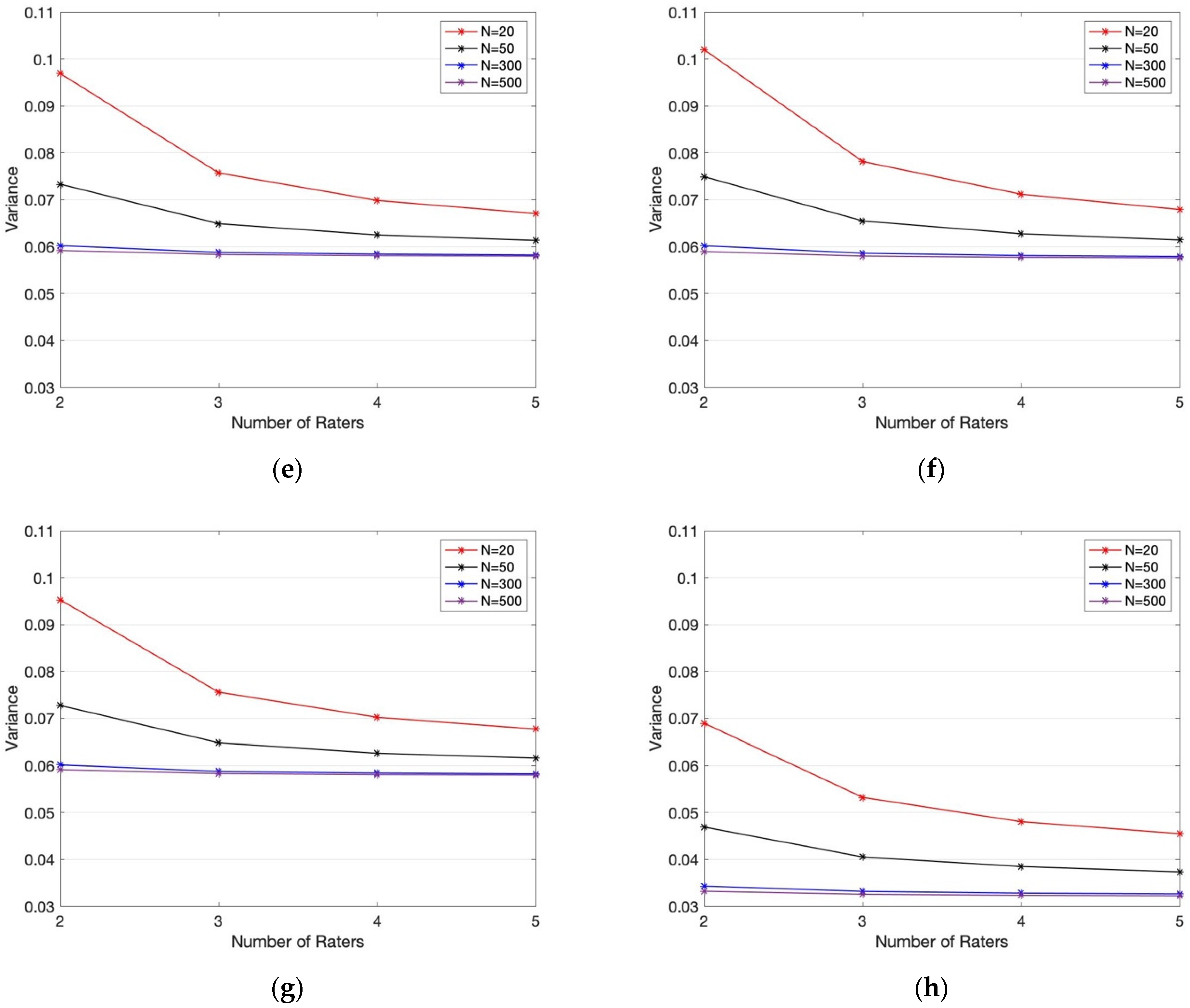

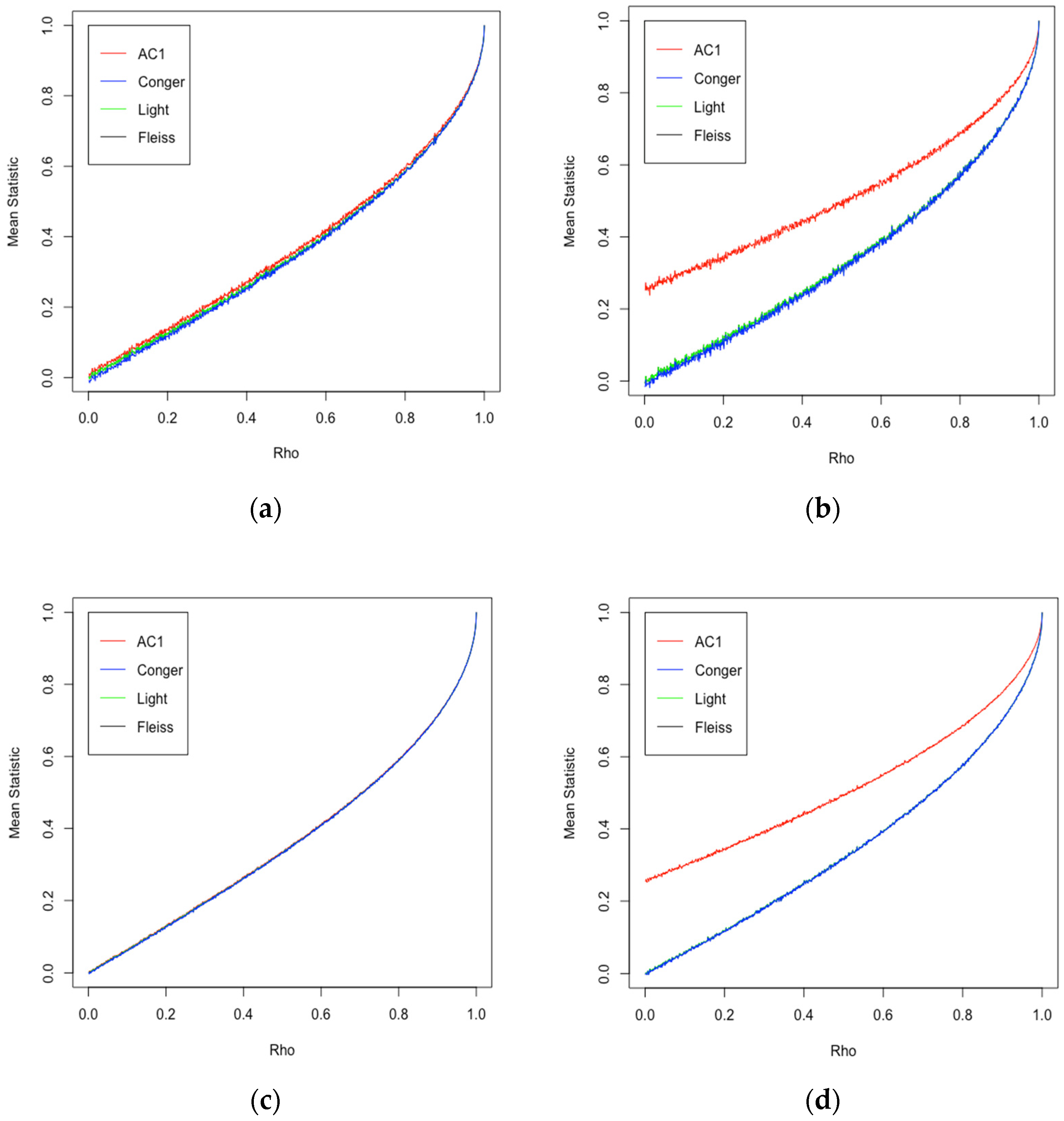

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

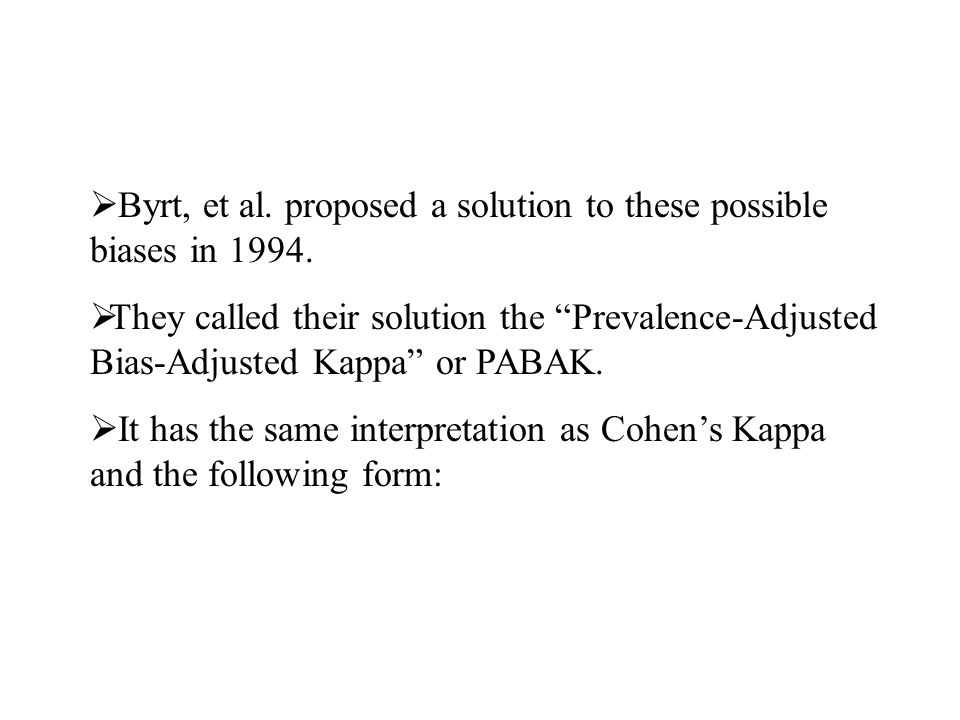

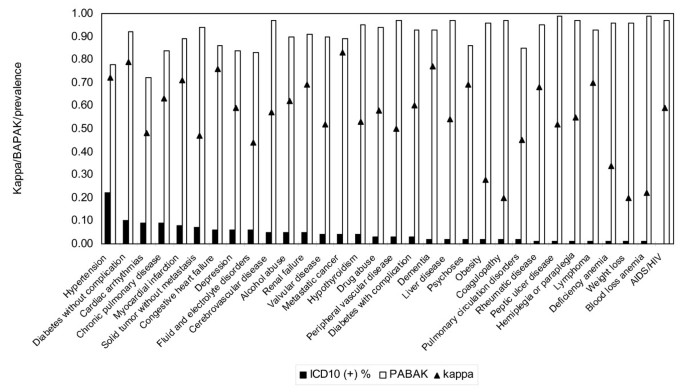

(PDF) Measuring agreement of administrative data with chart data using prevalence unadjusted and adjusted kappa

![[PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/1-Figure1-1.png)

Measuring agreement of administrative data with chart data using prevalence unadjusted and adjusted kappa | BMC Medical Research Methodology | Full Text

Intra-Rater and Inter-Rater Reliability of a Medical Record Abstraction Study on Transition of Care after Childhood Cancer | PLOS ONE

Evidence Based Evaluation of Anal Dysplasia Screening: Ready for Prime Time? Wm. Christopher Mathews, MD San Diego AETC, UCSD Owen Clinic. - ppt download

242-2009: More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters

(PDF) Assessing the accuracy of species distribution models: prevalence, kappa and the true skill statistic (TSS) | Bin You - Academia.edu